Nvidia has moved to address growing KV cache capacity limits by standardizing the offload of inference context to NVMe SSDs through its new Inference Context Memory Storage Platform (ICMSP). Announced at CES 2026, ICMSP extends GPU KV cache into NVMe-based storage and is backed by Nvidia’s NVMe storage partners.

In large language model inference, the KV cache stores context data – the keys and values that represent relationships between tokens as a model processes input. As inference progresses, this context grows with every new token generated, and often exceeds available GPU memory. When older entries are evicted and later needed again, they must be recomputed, increasing latency. Agentic AI and long-context workloads amplify the problem by expanding the amount of context that must be retained. ICMSP aims to mitigate this by making NVMe-resident KV cache part of the context memory address space and persistent across inference runs.
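
To give a sense of scale, here is a back-of-the-envelope estimate of KV cache size for a single sequence, using the standard sizing rule of one key and one value vector per layer per token. The model dimensions below are illustrative assumptions, not figures from Nvidia, and the function name is ours.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, num_tokens, bytes_per_value=2):
    """Approximate KV cache size in bytes for one sequence.

    The leading factor of 2 accounts for storing both a key and a value
    vector per layer, per KV head, per token. bytes_per_value=2 assumes
    16-bit (FP16/BF16) storage.
    """
    return 2 * num_layers * num_kv_heads * head_dim * num_tokens * bytes_per_value


# Hypothetical model shape (assumption): 80 layers, 8 KV heads, head dimension 128.
per_token = kv_cache_bytes(80, 8, 128, num_tokens=1)
ctx_128k = kv_cache_bytes(80, 8, 128, num_tokens=128 * 1024)

print(f"{per_token / 1024:.0f} KiB per token")
print(f"{ctx_128k / 1024**3:.0f} GiB for a 128K-token context")
```

Under these assumptions, one long-context session alone takes up tens of gigabytes, which is why many concurrent agent sessions can outgrow GPU memory.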

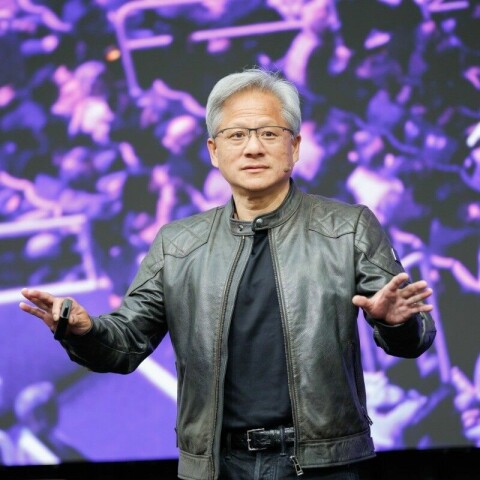

Nvidia CEO and founder Jensen Huang said: “AI is revolutionizing the entire computing stack – and now, storage. AI is no longer about one-shot chatbots but intelligent collaborators that understand the physical world, reason over long horizons, stay grounded in facts, use tools to do real work, and retain both short and long-term memory. With BlueField-4, Nvidia and our software and hardware partners are reinventing the storage stack for the next frontier of AI.”

During his CES presentation, he said that with BlueField-4, there is a KV cache context memory store right in the rack.

As AI models scale to trillions of parameters and multistep reasoning, they generate vast amounts of context data, and many such models will be running at the same time. The KV cache software – ICMSP – has to apply to GPUs, GPU servers, and racks of GPU servers, which can be running many different simultaneous inference workloads. Each model or agent workload’s KV cache context has to be managed and made available to the right AI model or agent running in the right GPUs, which may change as jobs are scheduled. This means there is a KV cache context metadata management task.
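
The metadata task described above can be pictured as a directory that records where each session's context lives and decides what to do when a job lands on a different node. This is a minimal sketch under our own assumptions: the class names, location strings, and decision labels are hypothetical and do not represent any Nvidia API.

```python
from dataclasses import dataclass


@dataclass
class ContextRecord:
    session_id: str
    model: str        # KV cache contents are specific to the model that produced them
    location: str     # hypothetical label, e.g. "gpu-node-3" or "nvme-shelf-0"
    num_tokens: int


class ContextDirectory:
    """Toy metadata directory: maps a session to the place its KV cache is stored."""

    def __init__(self):
        self._records = {}

    def register(self, session_id, model, location, num_tokens):
        self._records[session_id] = ContextRecord(session_id, model, location, num_tokens)

    def relocate(self, session_id, new_location):
        # Called when cached context is moved, e.g. offloaded from a GPU node to NVMe.
        self._records[session_id].location = new_location

    def plan_fetch(self, session_id, model, target_node):
        """Decide how a job scheduled on target_node obtains its context."""
        rec = self._records.get(session_id)
        if rec is None or rec.model != model:
            return "recompute"  # nothing reusable is known for this session/model
        if rec.location == target_node:
            return "local"
        return f"transfer:{rec.location}->{target_node}"


directory = ContextDirectory()
directory.register("agent-42", "model-a", "gpu-node-3", num_tokens=50_000)
directory.relocate("agent-42", "nvme-shelf-0")
print(directory.plan_fetch("agent-42", "model-a", "gpu-node-7"))
```

A real system would also have to handle partial prefixes, expiry, and tenant isolation; the sketch only shows the lookup that lets a rescheduled job find its context instead of recomputing it.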

Nvidia says ICMSP boosts KV cache capacity and accelerates the sharing of context across clusters of rack-scale AI systems. Persistent context for multi-turn AI agents improves responsiveness, increases AI factory throughput, and supports efficient scaling of long-context, multi-agent inference.

ICMSP relies on Rubin GPU cluster-level cache capacity and Nvidia’s coming BlueField-4 DPU with a Grace CPU and up to 800 Gbps throughput. BlueField-4 will provide and manage hardware-accelerated cache placement to eliminate metadata overhead, reduce data movement and ensure secure, isolated access from the GPU nodes. Nvidia software products – such as the DOCA framework, Dynamo KV cache offload engine, and its included NIXL (Nvidia Inference Transfer Library) software – provide smart, accelerated sharing of KV cache across AI nodes.

Dynamo works across a memory and storage hierarchy, from a GPU’s HBM, through a GPU server CPU’s DRAM, to direct-attached NVMe SSDs and networked external storage. Nvidia’s Spectrum-X Ethernet is also needed, offering a high-performance network fabric for RDMA-based access to AI-native KV caches. Overall, Nvidia says, ICMSP will provide up to 5x greater power efficiency than traditional storage and enable up to 5x higher tokens-per-second counts.
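
The tiering idea can be illustrated with a toy cache that demotes the least recently used entry to the next tier down instead of discarding it, and promotes entries back to the top tier when they are read. This is a conceptual sketch only: the tier names and capacities are assumptions, the LRU policy is our choice for illustration, and it does not reflect how Dynamo or NIXL are implemented.

```python
from collections import OrderedDict


class TieredKVCache:
    """Toy multi-tier cache with least-recently-used demotion between tiers."""

    def __init__(self, tiers):
        # tiers: list of (name, capacity_in_entries), fastest tier first
        self.names = [name for name, _ in tiers]
        self.caps = [cap for _, cap in tiers]
        self.stores = [OrderedDict() for _ in tiers]

    def _insert(self, level, key, value):
        store = self.stores[level]
        store[key] = value
        store.move_to_end(key)  # mark as most recently used in this tier
        if len(store) > self.caps[level]:
            old_key, old_value = store.popitem(last=False)  # least recently used
            if level + 1 < len(self.stores):
                self._insert(level + 1, old_key, old_value)  # demote one tier down
            # Otherwise the entry falls off the last tier and would need recomputing.

    def put(self, key, value):
        for store in self.stores:
            store.pop(key, None)  # drop any stale copy before re-inserting
        self._insert(0, key, value)

    def get(self, key):
        """Return (value, tier_it_was_found_in) and promote the entry to the top tier."""
        for level, store in enumerate(self.stores):
            if key in store:
                value = store.pop(key)
                self._insert(0, key, value)
                return value, self.names[level]
        return None, None

    def location(self, key):
        for level, store in enumerate(self.stores):
            if key in store:
                return self.names[level]
        return None


cache = TieredKVCache([("hbm", 2), ("dram", 2), ("nvme", 4)])
for session in ["a", "b", "c", "d", "e"]:
    cache.put(session, f"kv-blocks-for-{session}")

print(cache.location("a"))  # oldest entry has been pushed down the hierarchy
print(cache.get("a"))       # a read finds it in a lower tier and promotes it
```

The point of the lower tiers is that a hit there costs a transfer rather than a full recomputation of the context.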

Nvidia lists many storage partners that will support ICMSP with BlueField-4, which will be available in the second half of 2026. The initial partner list includes AIC, Cloudian, DDN, Dell Technologies, HPE, Hitachi Vantara, IBM, Nutanix, Pure Storage, Supermicro, VAST Data, and WEKA. We expect NetApp, Lenovo, and Hammerspace will join them.

Comment

The general architectural idea of offloading or extending the KV cache to NVMe SSDs has already been implemented by, for example, Hammerspace with its Tier zero tech, VAST Data with its open source VAST Undivided Attention (VUA) software, and WEKA with its Augmented Memory Grid. Dell also provides KV cache offload to its PowerScale, ObjectScale, and Project Lightning (private preview) storage by integrating technologies like LMCache and NIXL with the three storage engines.

These are BlueField-3 era offerings. In effect, Nvidia is now aiming to provide a standardized KV cache memory extension framework to all its storage partners. Dell, IBM, VAST and WEKA have already said they will support ICMSP. A WEKA blog, The Context Era Has Begun, explains how it will do this and why. It says that ICMSP is “a new class of AI-native infrastructure designed to treat inference context as a first-class platform resource. This architectural direction is aligned with WEKA’s Augmented Memory Grid, which extends GPU memory to enable limitless, fast, efficient, reusable context at scale.”

WEKA’s Jim Sherhart, VP for Product Marketing, says: “Applying heavyweight durability, replication, and metadata services designed for long-lived data, introduces unnecessary overhead – increasing latency and power draw while degrading inference economics.

“Inference context still needs the right controls, but it doesn’t behave like enterprise data, and it shouldn’t be forced through enterprise-storage semantics. Traditional protocols and data services introduce overhead (metadata paths, small-IO amplification, durability/replication defaults, multi-tenant controls applied in the wrong place) that can turn ‘fast context’ into ‘slow storage.’ When context is performance-critical and frequently reused, that overhead shows up immediately as higher tail latency, lower throughput, and worse efficiency.”

VAST Data says its storage/AI operating system (AI OS) will run on the BlueField-4 processor and “collapses legacy storage tiers to deliver shared, pod-scale KV cache with deterministic access for long-context, multi-turn and multi-agent inference.”

John Mao, VP Global Technology Alliances at VAST, said: “Inference is becoming a memory system, not a compute job. The winners won’t be the clusters with the most raw compute – they’ll be the ones that can move, share, and govern context at line rate. Continuity is the new performance frontier. If context isn’t available on demand, GPUs idle and economics collapse. With the VAST AI Operating System on Nvidia BlueField-4, we’re turning context into shared infrastructure – fast by default, policy-driven when needed, and built to stay predictable as agentic AI scales.”

A VAST Data blog, More Inference, Less Infrastructure: How Customers Achieve Breakthrough Efficiency with VAST Data and Nvidia, discusses the company’s approach.

Update

Hammerspace’s CMO, Molly Presley, tells us: “Inference Context Memory is exactly the kind of GPU-side data and metadata pipeline Hammerspace was built for. We are actively working with Nvidia on BlueField-4 and we have a program in plan to support it as part of our roadmap this year. Hammerspace already delivers the core requirement — getting the right data to the right GPU at the right time — and ICM is a natural extension of that for inference-time state, KV caches, and context reuse at scale.”

IBM Fellow, CTO and VP for IBM Storage, Vincent Hsu, writes in an IBM blog: “IBM is working with Nvidia to combine advanced storage and networking technologies to unlock scalable, high-performance LLM inference. By integrating IBM Storage Scale’s global namespace and locality-aware placement with the Nvidia Inference Context Memory Storage Platform powered by BlueField-4 on the Nvidia Rubin platform, it delivers low-latency KV cache access, efficient resource utilization, and reduced TCO. This new IBM Storage Scale solution is purpose built for next-generation AI deployments on Dynamo.”

MinIO tells us it has nothing to say about Nvidia’s ICMSP at this time.

NetApp says: “NetApp and NVIDIA have an extensive partnership. NetApp will support the new BlueField-4-based architectures for AI workloads.”